Lukas Höllein

I'm a PhD student at the Visual Computing & Artificial Intelligence Lab at the Technical University of Munich, supervised by Prof. Dr. Matthias Nießner.

Email / Google Scholar / Twitter / GitHub / CV

Research

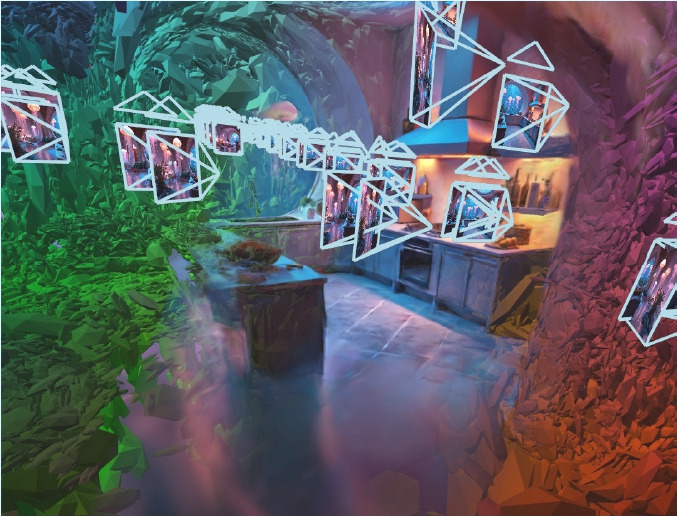

WorldExplorer: Towards Generating Fully Navigable 3D Scenes

Manuel-Andreas Schneider*, Lukas Höllein*, Matthias Nießner

SIGGRAPH Asia, 2025

project page / arXiv / video / code

WorldExplorer generates 3D scenes from a given text prompt using camera-guided video diffusion models.

IntrinsiX: High-Quality PBR Generation using Image Priors

Peter Kocsis, Lukas Höllein, Matthias Nießner

NeurIPS, 2025

project page / arXiv / video / code

IntrinsiX generates high-quality intrinsic images from text descriptions. The decomposed image prior can be used for various applications, such as relighting, material editing, and texture generation.

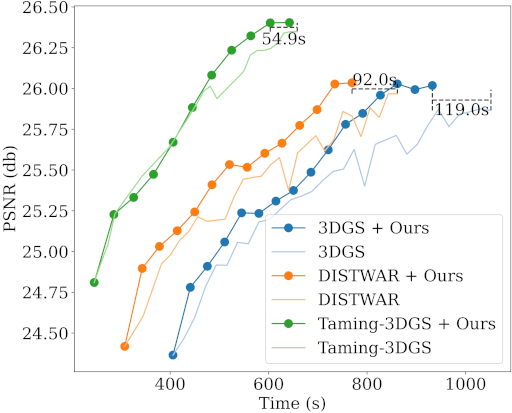

3DGS-LM: Faster Gaussian-Splatting Optimization with Levenberg-Marquardt

Lukas Höllein, Aljaž Božič, Michael Zollhöfer, Matthias Nießner

ICCV, 2025

project page / arXiv / video / code

3DGS-LM accelerates Gaussian-Splatting optimization by replacing the Adam optimizer with Levenberg-Marquardt.

QuickSplat: Fast 3D Surface Reconstruction via Learned Gaussian Initialization

Yueh-Cheng Liu, Lukas Höllein, Matthias Nießner, Angela Dai

ICCV, 2025

project page / arXiv / video

QuickSplat learns data-driven priors to generate dense initializations for 2D Gaussian Splatting optimization of large-scale indoor scenes. We further learn to jointly estimate the densification and the update of the scene parameters at each iteration.

ViewDiff: 3D-Consistent Image Generation with Text-to-Image Models

Lukas Höllein, Aljaž Božič, Norman Müller, David Novotny, Hung-Yu Tseng, Christian Richardt, Michael Zollhöfer, Matthias Nießner

CVPR, 2024

project page / arXiv / video / code

ViewDiff generates high-quality, multi-view consistent images of a real-world 3D object in authentic surroundings.

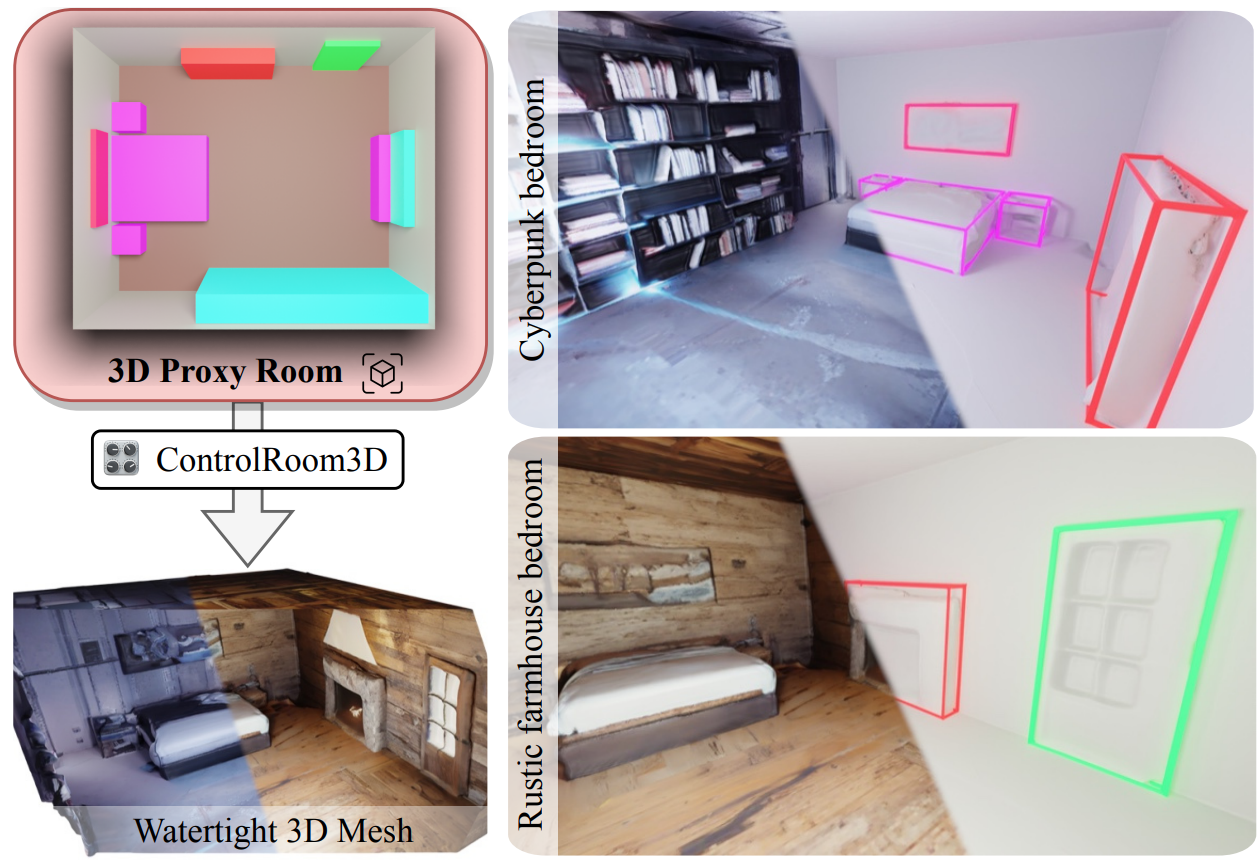

ControlRoom3D: Room Generation using Semantic Proxy Rooms

Jonas Schult, Sam Tsai, Lukas Höllein, Bichen Wu, Jialiang Wang, Chih-Yao Ma, Kunpeng Li, Xiaofang Wang, Felix Wimbauer, Zijian He, Peizhao Zhang, Bastian Leibe, Peter Vajda, Ji Hou

CVPR, 2024

project page / arXiv / video

ControlRoom3D creates diverse and plausible 3D room meshes that align well with user-defined room layouts and textual descriptions of the room style.

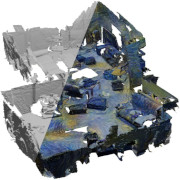

Text2Room: Extracting Textured 3D Meshes from 2D Text-to-Image Models

Lukas Höllein*, Ang Cao*, Andrew Owens, Justin Johnson, Matthias Nießner

ICCV, 2023 (Oral Presentation)

project page / arXiv / video / code

Text2Room generates textured 3D meshes from a given text prompt using 2D text-to-image models. The core idea of our approach is a tailored viewpoint selection such that the content of each image can be fused into a seamless, textured 3D mesh. More specifically, we propose a continuous alignment strategy that iteratively fuses scene frames with the existing geometry.

StyleMesh: Style Transfer for Indoor 3D Scene Reconstructions

Lukas Höllein, Justin Johnson, Matthias Nießner

CVPR, 2022

project page / arXiv / video / code

We apply style transfer to mesh reconstructions of indoor scenes. We optimize an explicit texture for the reconstructed mesh of a scene and stylize it jointly from all available input images. Our depth- and angle-aware optimization leverages the surface normals and depth of the underlying mesh to create a uniform and consistent stylization for the whole scene.

Miscellanea

Talks

Academic Service

Reviewer for CVPR 2023, ICCV 2023, SIGGRAPH Asia 2023, CVPR 2024, SIGGRAPH 2024, ECCV 2024 (Outstanding Reviewer Award), CVPR 2025, ICCV 2025, IEEE TVCG

Teaching

Advanced Deep Learning for Computer Vision: Visual Computing: Winter 24/25, Summer 25

advised 10+ students in semester-long course projects about neural rendering / novel-view synthesis

3D Scanning & Motion Capture: Summer 22, Winter 22/23, Summer 23, Winter 23/24, Summer 24

advised 40+ students in 6-week course projects about stereo reconstruction, bundle adjustment, ARAP, and 3D reconstruction

Master's Thesis Supervision:

advised 4 students on topics about neural style transfer, world generation, and scene editing

Thank you, Jon Barron, for providing the source code of this website.